Reference manual#

This is an overview of all subjects that Quality-time can measure, all metrics that Quality-time can use to measure subjects, and all sources from which Quality-time can collect data to measure the metrics. For each supported combination of metric and source, the parameters that can be used to configure the source are listed.

Subjects#

This is an overview of all the subjects that Quality-time can measure. For each subject, the metrics that can be used to measure the subject are listed.

CI-environment#

A continuous integration environment.

Process#

A software development and/or maintenance process.

Quality report#

A software quality report.

Supporting metrics

Software#

A custom software application or component.

Supporting metrics

Metrics#

This is an overview of all the metrics that Quality-time can use to measure subjects. For each metric, the default target, the supported scales, and the default tags are given. In addition, the sources from which data can be collected to measure the metric are listed.

Average issue lead time#

The average lead time for changes completed in a certain time period.

Why measure average issue lead time? The shorter the lead time for changes, the sooner the new features can be used. Also, the shorter the lead time for changes, the fewer changes are in progress at the same time. Less context switching is needed and the risk of interfering changes is reduced.

- Default target

≦ 10 days

- Scales

count

- Default tags

process efficiency

Supporting sources

CI-pipeline duration#

The duration of a CI-pipeline.

Why measure CI-pipeline duration? CI-pipelines that take too much time may signal something wrong with the build.

- Default target

≦ 10 minutes

- Scales

count

- Default tags

ci

Supported subjects

Supporting sources

Change failure rate#

The percentage of deployments causing a failure in production.

Why measure change failure rate? The change failure rate is an indicator of the DevOps effectiveness of a team.

How to configure change failure rate? Because this metric is a “rate”, it needs a numerator (the number of failed deployments in the look back period) and a denominator (the number of deployments in the look back period). The metric can be configured with a combination of Jira, and either Jenkins or GitLab CI as source. Jira is used as source for the number of failures (numerator). Either Jenkins or GitLab CI is used as source for the number of deployments (denominator).

When Azure DevOps is configured as source for the metric, it is used for both the number of failures (numerator) and the number of deployments (denominator). Azure DevOps needs to be added as source only once.
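The numerator and denominator described above combine as in the following minimal sketch. The function name and the choice to report 0% when there are no deployments are assumptions made for illustration, not Quality-time's implementation:

```python
def change_failure_rate(failed_deployments: int, deployments: int) -> float:
    """Return the percentage of deployments that caused a failure."""
    if deployments == 0:
        return 0.0  # Assumption: without deployments, nothing could have failed.
    return 100 * failed_deployments / deployments


# Hypothetical counts for one look back period.
print(change_failure_rate(failed_deployments=3, deployments=40))  # 7.5
```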

- Default target

≦ 0% of the failed deployments

- Scales

percentage

- Default tags

process efficiency

Supporting sources

Commented out code#

The number of blocks of commented out lines of code.

Why measure commented out code? Code should not be commented out because it bloats the sources and may confuse the reader as to why the code is still there, making the source code harder to understand and maintain. Unused code should be deleted. It can be retrieved from the version control system if needed.

See also

- Default target

≦ 0 blocks

- Scales

count

- Default tags

maintainability

Supported subjects

Supporting sources

Complex units#

The number of units (classes, functions, methods, files) that are too complex.

Why measure complex units? Complex code makes software harder to test and harder to maintain. Complex code is harder to test because there are more execution paths that need to be tested. Complex code is harder to maintain because it is harder to understand and analyze.

See also

- Default target

≦ 0 complex units

- Scales

count (default)

percentage

- Default tags

maintainability

testability

Supported subjects

Supporting sources

Dependencies#

The number of (outdated) dependencies.

Why measure dependencies? Dependencies that are out of date can be considered a form of technical debt. On the one hand, not upgrading a dependency postpones the work of testing the new version. And, if the new version of a dependency has backwards-incompatible changes, it also postpones making adaptations to cater for those changes. On the other hand, upgrading the dependency may fix bugs and vulnerabilities, and unlock new features. Measuring the number of outdated dependencies provides insight into the size of this backlog.

- Default target

≦ 0 dependencies

- Scales

count (default)

percentage

- Default tags

maintainability

Supported subjects

Supporting sources

Duplicated lines#

The number of lines that are duplicated.

Why measure duplicated lines? Duplicate code makes software larger and thus potentially harder to maintain. Also, if the duplicated code contains bugs, they need to be fixed in multiple locations.

- Default target

≦ 0 lines

- Scales

count (default)

percentage

- Default tags

maintainability

Supported subjects

Supporting sources

Failed CI-jobs#

The number of continuous integration jobs or pipelines that have failed.

Why measure failed CI-jobs? Although it is to be expected that CI-jobs or pipelines will fail from time to time, a significant number of failed CI-jobs or pipelines over a longer period of time could be a sign that the continuous integration process is not functioning properly. Also, having many failed CI-jobs or pipelines makes it hard to see that additional jobs or pipelines start failing.

- Default target

≦ 0 CI-jobs

- Scales

count

- Default tags

ci

Supported subjects

Supporting sources

Issues#

The number of issues.

Why measure issues? What exactly counts as an issue depends on what is available in the source. The issues metric can, for example, be used to count the number of open bug reports, the number of ready user stories, or the number of overdue customer service requests. For sources that support a query language, the issues to be counted can be specified using the query language of the source.

- Default target

≦ 0 issues

- Scales

count

Supporting sources

Job runs within time period#

The number of job runs within a specified time period.

Why measure job runs within time period? Frequent deployments are associated with smaller, less risky deployments and with lower lead times for new features.

- Default target

≧ 30 CI-job runs

- Scales

count

- Default tags

ci

Supported subjects

Supporting sources

Long units#

The number of units (functions, methods, files) that are too long.

Why measure long units? Long units are deemed harder to maintain.

- Default target

≦ 0 long units

- Scales

count (default)

percentage

- Default tags

maintainability

Supported subjects

Supporting sources

Manual test duration#

The duration of the manual test in minutes.

Why measure manual test duration? Preferably, all regression tests are automated. When this is not feasible, it is good to know how much time it takes to execute the manual tests, since they need to be executed before every release.

- Default target

≦ 0 minutes

- Scales

count

- Default tags

test quality

Supporting sources

Manual test execution#

Measure the number of manual test cases that have not been tested on time.

Why measure manual test execution? Preferably, all regression tests are automated. When this is not feasible, it is good to know whether the manual regression tests have been executed recently.

- Default target

≦ 0 manual test cases

- Scales

count

- Default tags

test quality

Supporting sources

Many parameters#

The number of units (functions, methods, procedures) that have too many parameters.

Why measure many parameters? Units with many parameters are deemed harder to maintain.

- Default target

≦ 0 units with too many parameters

- Scales

count (default)

percentage

- Default tags

maintainability

Supported subjects

Supporting sources

Merge requests#

The number of merge requests.

Why measure merge requests? Merge requests need to be reviewed and approved. This metric allows for measuring the number of merge requests without the required approvals.

How to configure merge requests? In itself, the number of merge requests is not indicative of software quality. However, by setting the parameter “Minimum number of upvotes”, the metric can report on merge requests that have fewer than the minimum number of upvotes. The parameter “Merge request state” can be used to exclude closed merge requests, for example. The parameter “Target branches to include” can be used to further limit the merge requests to only count merge requests that target specific branches, for example the “develop” branch.
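The effect of the three parameters can be sketched as a simple filter. The `MergeRequest` record and the function below are hypothetical and only illustrate the selection logic described above; they are not Quality-time's data model:

```python
from dataclasses import dataclass


@dataclass
class MergeRequest:
    """Hypothetical, simplified merge request record."""

    title: str
    state: str
    target_branch: str
    upvotes: int


def merge_requests_to_count(merge_requests, minimum_upvotes, states, target_branches):
    """Keep merge requests in the given states and branches that have too few upvotes."""
    return [
        mr
        for mr in merge_requests
        if mr.state in states
        and mr.target_branch in target_branches
        and mr.upvotes < minimum_upvotes
    ]


merge_requests = [
    MergeRequest("Add login", "open", "develop", 0),
    MergeRequest("Fix typo", "open", "develop", 2),
    MergeRequest("Old idea", "closed", "develop", 0),
    MergeRequest("Hotfix", "open", "main", 0),
]
counted = merge_requests_to_count(merge_requests, 2, {"open"}, {"develop"})
print([mr.title for mr in counted])  # ['Add login']
```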

- Default target

≦ 0 merge requests

- Scales

count (default)

percentage

- Default tags

ci

Supported subjects

Supporting sources

Metrics#

The number of metrics from one or more quality reports, with specific states and/or tags.

Why measure metrics? Use this metric to monitor other quality reports. For example, count the number of metrics that don’t meet their target value, or count the number of metrics that have been marked as technical debt for more than two months.

How to configure metrics? After adding Quality-time as a source to a “Metrics”-metric, one can configure which statuses to count and which metrics to consider by filtering on report names or identifiers, on metric types, on source types, and on tags.

Note

If the “Metrics” metric is itself part of the set of metrics it counts, a peculiar situation may occur: when you’ve configured the “Metrics” to count red metrics and its target is not met, the metric itself will become red and thus be counted as well. For example, if the target is at most five red metrics, and the number of red metrics increases from five to six, the “Metrics” value will go from five to seven. You can prevent this by making sure the “Metrics” metric is not in the set of counted metrics, for example by putting it in a different report and only count metrics in the other report(s).
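The arithmetic in this note can be reproduced with a small sketch. The function is purely illustrative and assumes the "Metrics" metric counts red metrics and is part of the set it counts:

```python
def metrics_value(other_red_metrics: int, target: int) -> int:
    """Return the value of a "Metrics" metric that counts red metrics, itself included."""
    # The metric turns red when the red metrics it counts exceed the target...
    is_red_itself = other_red_metrics > target
    # ...and a red "Metrics" metric is then counted as one more red metric.
    return other_red_metrics + (1 if is_red_itself else 0)


print(metrics_value(other_red_metrics=5, target=5))  # 5
print(metrics_value(other_red_metrics=6, target=5))  # 7
```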

- Default target

≦ 0 metrics

- Scales

count (default)

percentage

Supported subjects

Supporting sources

Missing metrics#

The number of metrics that can be added to each report, but have not been added yet.

Why measure missing metrics? Provide an overview of metrics still to be added to the quality report. If metrics will not be added, a reason can be documented.

- Default target

≦ 0 missing metrics

- Scales

count (default)

percentage

Supported subjects

Supporting sources

Performancetest duration#

The duration of the performancetest in minutes.

Why measure performancetest duration? Performance tests, especially endurance tests, may need to run for a minimum duration to give relevant results.

- Default target

≧ 30 minutes

- Scales

count

- Default tags

performance

Supported subjects

Supporting sources

Performancetest stability#

The duration of the performancetest at which throughput or error count increases.

Why measure performancetest stability? When testing endurance, the throughput and error count should remain stable for the complete duration of the performancetest. If throughput or error count starts to increase during the performancetest, this may indicate memory leaks or other resource problems.

- Default target

≧ 100% of the minutes

- Scales

percentage

- Default tags

performance

Supported subjects

Supporting sources

Scalability#

The number of virtual users (or percentage of the maximum number of virtual users) at which ramp-up of throughput breaks.

Why measure scalability? When stress testing, the load on the system-under-test has to increase sufficiently to detect the point at which the system breaks, as indicated by increasing throughput or error counts. If this breakpoint is not detected, the load has not been increased enough.

- Default target

≧ 75 virtual users

- Scales

count (default)

percentage

- Default tags

performance

Supported subjects

Supporting sources

Security warnings#

The number of security warnings about the software.

Why measure security warnings? Monitor security warnings about the software, its source code, dependencies, or infrastructure so vulnerabilities can be fixed before they end up in production.

- Default target

≦ 0 security warnings

- Scales

count

- Default tags

security

Supported subjects

Sentiment#

How are the team members feeling?

Why measure sentiment? Satisfaction is how fulfilled developers feel with their work, team, tools, or culture; well-being is how healthy and happy they are, and how their work impacts it. Measuring satisfaction and well-being can be beneficial for understanding productivity and perhaps even for predicting it. For example, productivity and satisfaction are correlated, and it is possible that satisfaction could serve as a leading indicator for productivity; a decline in satisfaction and engagement could signal upcoming burnout and reduced productivity.

- Default target

≧ 10

- Scales

count

Supported subjects

Supporting sources

Size (LOC)#

The size of the software in lines of code.

Why measure size (LOC)? The size of software is correlated with the effort it takes to maintain it. Lines of code is one of the most widely used metrics to measure size of software.

How to configure size (LOC)? To track the absolute size of the software, set the scale of the metric to ‘count’ and add one or more sources.

To track the relative size of sources, set the scale of the metric to ‘percentage’ and add cloc as source. Make sure to generate cloc JSON reports with the --by-file option so that filenames are included in the JSON reports. Use the ‘files to include’ field to select the sources that should be compared to the total amount of code. For example, to measure the relative amount of test code, use the regular expression .*test.*.
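As an illustration of the relative size calculation, the sketch below computes the share of code in files whose names match a regular expression. The report excerpt is hypothetical, the assumed layout (one entry per filename next to `header` and `SUM` entries) reflects cloc's by-file JSON output as far as known here, and matching the expression against the whole filename is an assumption rather than documented Quality-time behavior:

```python
import json
import re

# Hypothetical excerpt of a report generated with: cloc --by-file --json
CLOC_REPORT = """
{
  "header": {"n_files": 3, "n_lines": 128},
  "src/app.py": {"blank": 10, "comment": 5, "code": 60, "language": "Python"},
  "src/util.py": {"blank": 4, "comment": 1, "code": 20, "language": "Python"},
  "tests/test_app.py": {"blank": 6, "comment": 2, "code": 20, "language": "Python"},
  "SUM": {"blank": 20, "comment": 8, "code": 100, "nFiles": 3}
}
"""


def relative_size(report: dict, files_to_include: str) -> float:
    """Return the lines of code in matching files as a percentage of all code."""
    files = {name: entry for name, entry in report.items() if name not in ("header", "SUM")}
    total = sum(entry["code"] for entry in files.values())
    included = sum(
        entry["code"] for name, entry in files.items() if re.fullmatch(files_to_include, name)
    )
    return 100 * included / total if total else 0.0


print(relative_size(json.loads(CLOC_REPORT), r".*test.*"))  # 20.0
```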

Note

Unfortunately, SonarQube can only be used to measure the absolute size of the software. SonarQube does not report the sizes of files with test code for all programming languages. Hence it cannot be used to measure the relative size of test code.

- Default target

≦ 30000 lines

- Scales

count (default)

percentage

- Default tags

maintainability

Supported subjects

Supporting sources

Slow transactions#

The number of transactions slower than their target response time.

Why measure slow transactions? Transactions slower than their target response time indicate performance problems that need attention.

- Default target

≦ 0 transactions

- Scales

count

- Default tags

performance

Supported subjects

Supporting sources

Software version#

The version number of the software as analyzed by the source.

Why measure software version? Monitor that the version of the software is at least a specific version or get notified when the software version becomes higher than a specific version.

More information Quality-time uses the packaging library (1) to parse version numbers. The packaging library expects version numbers to comply with PEP-440 (2). PEP is an abbreviation for Python Enhancement Proposal, but this PEP is primarily a standard for version numbers. PEP-440 encompasses most of the semantic versioning scheme (3) so version numbers that follow semantic versioning are usually parsed correctly.

- Default target

≧ 1.0

- Scales

version_number

- Default tags

ci

Supported subjects

Supporting sources

Source up-to-dateness#

The number of days since the source was last updated.

Why measure source up-to-dateness? If the information provided by sources is outdated, so will be the metrics in Quality-time. Hence it is important to monitor that sources are up-to-date.

- Default target

≦ 3 days

- Scales

count

- Default tags

ci

Supported subjects

Supporting sources

Source version#

The version number of the source.

Why measure source version? Monitor that the version of a source is at least a specific version or get notified when the version becomes higher than a specific version.

How to configure source version? If you decide with your development team that you are only interested in the major and minor updates of tools and want to ignore any patch updates, then the following settings can be helpful.

Tip

Leave the metric direction default, i.e. a higher version number is better. Set the metric target to the first two components (major and minor) of the latest available software version. So if the latest is 3.9.4, set it to 3.9. Set the metric near target to only the first component (major), so in this example that will be 3.

The result will be that when there is a minor update, this metric will turn yellow. So, suppose the version of your tool is at version 2.12.15 and the latest version available is 2.13.2, then this metric will turn yellow. If there is only a patch update, the metric will stay green. If there is a major update, the metric will turn red. So when we have 2.9.3 and the version available is 3.9.1, then the metric will turn red.
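The resulting colors can be checked with a small sketch. It is a simplified stand-in that only handles plain dotted numeric versions; Quality-time itself parses versions with the packaging library, and the function names here are hypothetical:

```python
def parse(version: str) -> tuple[int, ...]:
    """Parse a dotted version number such as "2.12.15" into a tuple of integers."""
    return tuple(int(part) for part in version.split("."))


def at_least(version: str, minimum: str) -> bool:
    """Compare two versions after padding the shorter one with zeros."""
    left, right = parse(version), parse(minimum)
    length = max(len(left), len(right))
    left += (0,) * (length - len(left))
    right += (0,) * (length - len(right))
    return left >= right


def status(version: str, target: str, near_target: str) -> str:
    """Return the color of a 'higher is better' version metric."""
    if at_least(version, target):
        return "green"
    if at_least(version, near_target):
        return "yellow"
    return "red"


print(status("2.13.0", target="2.13", near_target="2"))   # green: only a patch update is available
print(status("2.12.15", target="2.13", near_target="2"))  # yellow: a minor update is available
print(status("2.9.3", target="3.9", near_target="3"))     # red: a major update is available
```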

More information Quality-time uses the packaging library (1) to parse version numbers. The packaging library expects version numbers to comply with PEP-440 (2). PEP is an abbreviation for Python Enhancement Proposal, but this PEP is primarily a standard for version numbers. PEP-440 encompasses most of the semantic versioning scheme (3) so version numbers that follow semantic versioning are usually parsed correctly.

- Default target

≧ 1.0

- Scales

version_number

- Default tags

ci

Supported subjects

Suppressed violations#

The number of violations suppressed in the source.

Why measure suppressed violations? Some tools allow for suppression of violations. Having the number of suppressed violations visible in Quality-time allows for a double check of the suppressions.

- Default target

≦ 0 suppressed violations

- Scales

count (default)

percentage

- Default tags

maintainability

Supported subjects

Supporting sources

Test branch coverage#

The number of code branches not covered by tests.

Why measure test branch coverage? Code branches not covered by tests may contain bugs and signal incomplete tests.

- Default target

≦ 0 uncovered branches

- Scales

count (default)

percentage

- Default tags

test quality

Supported subjects

Supporting sources

Test cases#

The number of test cases.

Why measure test cases? Track the test results of test cases so there is traceability from the test cases, defined in Jira, to the test results in test reports produced by tools such as Robot Framework or JUnit.

How to configure test cases? The test cases metric reports on the number of test cases, and their test results. The test case metric is different than other metrics because it combines data from two types of sources: it needs one or more sources for the test cases, and one or more sources for the test results. The test case metric then matches the test results with the test cases.

Currently, only Jira is supported as source for the test cases. JUnit, TestNG, and Robot Framework are supported as source for the test results. So, to configure the test cases metric, you need to add at least one Jira source and at least one JUnit, TestNG, or Robot Framework source. In addition, to allow the test case metric to match test cases from Jira with test results from the JUnit, TestNG, or Robot Framework XML files, the test results should mention Jira issue keys in their title or description.

Suppose you have configured Jira with the query: project = "My Project" and type = "Logical Test Case" and this results in these test cases:

| Key | Summary |
|---|---|
| MP-1 | Test case 1 |
| MP-2 | Test case 2 |
| MP-3 | Test case 3 |
| MP-4 | Test case 4 |

Also suppose your JUnit XML has the following test results:

    <testsuite tests="5" errors="0" failures="1" skipped="2">
      <testcase name="MP-1; step 1">
        <failure />
      </testcase>
      <testcase name="MP-1; step 2">
        <skipped />
      </testcase>
      <testcase name="MP-2">
        <skipped />
      </testcase>
      <testcase name="MP-3; step 1"/>
      <testcase name="MP-3; step 2"/>
    </testsuite>

The test case metric will combine the JUnit XML file with the test cases from Jira and report one failed, one skipped, one passed, and one untested test case:

| Key | Summary | Test result |
|---|---|---|
| MP-1 | Test case 1 | failed |
| MP-2 | Test case 2 | skipped |
| MP-3 | Test case 3 | passed |
| MP-4 | Test case 4 | untested |

If multiple test results in the JUnit, TestNG, or Robot Framework XML file map to one Jira test case (as with MP-1 and MP-3 above), the ‘worst’ test result is reported. Possible test results from worst to best are: errored, failed, skipped, and passed. Test cases not found in the test results are listed as untested (as with MP-4 above).
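The matching rule can be sketched as follows, using the example data from above. This is a minimal illustration and not Quality-time's implementation: the function names are hypothetical, and the regular expression used to recognize Jira issue keys in test names is an assumption.

```python
import re
import xml.etree.ElementTree as ET

JUNIT_XML = """
<testsuite>
  <testcase name="MP-1; step 1"><failure /></testcase>
  <testcase name="MP-1; step 2"><skipped /></testcase>
  <testcase name="MP-2"><skipped /></testcase>
  <testcase name="MP-3; step 1"/>
  <testcase name="MP-3; step 2"/>
</testsuite>
"""
JIRA_KEYS = ["MP-1", "MP-2", "MP-3", "MP-4"]
WORST_TO_BEST = ["errored", "failed", "skipped", "passed"]


def result_of(testcase: ET.Element) -> str:
    """Map a JUnit testcase element to a test result."""
    for tag, result in (("error", "errored"), ("failure", "failed"), ("skipped", "skipped")):
        if testcase.find(tag) is not None:
            return result
    return "passed"


def match_test_results(junit_xml: str, jira_keys: list[str]) -> dict[str, str]:
    """Report the worst test result per Jira key, or 'untested' if there is none."""
    results: dict[str, list[str]] = {key: [] for key in jira_keys}
    for testcase in ET.fromstring(junit_xml.strip()).iter("testcase"):
        # Assumption: Jira keys look like uppercase letters, a dash, and digits.
        for key in re.findall(r"[A-Z]+-\d+", testcase.get("name", "")):
            if key in results:
                results[key].append(result_of(testcase))
    return {
        key: min(found, key=WORST_TO_BEST.index) if found else "untested"
        for key, found in results.items()
    }


print(match_test_results(JUNIT_XML, JIRA_KEYS))
# {'MP-1': 'failed', 'MP-2': 'skipped', 'MP-3': 'passed', 'MP-4': 'untested'}
```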

- Default target

≧ 0 test cases

- Scales

count (default)

percentage

- Default tags

test quality

Supported subjects

Supporting sources

Test line coverage#

The number of lines of code not covered by tests.

Why measure test line coverage? Code lines not covered by tests may contain bugs and signal incomplete tests.

- Default target

≦ 0 uncovered lines

- Scales

count (default)

percentage

- Default tags

test quality

Supported subjects

Supporting sources

Tests#

The number of tests.

Why measure tests? Keep track of the total number of tests or the number of tests with different states, for example failed or errored.

- Default target

≧ 0 tests

- Scales

count (default)

percentage

- Default tags

test quality

Supported subjects

Time remaining#

The number of days remaining until a date in the future.

Why measure time remaining? Keep track of the time remaining until for example a release date, the end date of a policy, or the next team building retreat.

- Default target

≧ 28 days

- Scales

count

Supported subjects

Supporting sources

Todo and fixme comments#

The number of todo and fixme comments in source code.

Why measure todo and fixme comments? Code should not contain traces of unfinished work. The presence of todo and fixme comments may be indicative of technical debt or hidden defects.

See also

- Default target

≦ 0 todo and fixme comments

- Scales

count

- Default tags

maintainability

Supported subjects

Supporting sources

Unmerged branches#

The number of branches that have not been merged to the default branch.

Why measure unmerged branches? It is strange if branches have had no activity for a while and have not been merged to the default branch. Maybe commits have been cherry-picked, or maybe the work has been postponed, but it also sometimes happens that someone simply forgets to merge the branch.

How to configure unmerged branches? To change how soon Quality-time should consider branches to be inactive, use the parameter “Number of days since last commit after which to consider branches inactive”.

Which branch is the default branch is configured in GitLab or Azure DevOps. If you want to use a different branch as the default branch, you need to configure this in the source; see the documentation for GitLab or Azure DevOps.
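The inactivity parameter amounts to a date comparison, as in the sketch below. The function, the branch data, and the treatment of the boundary (a branch counts as inactive only when its last commit is more than the given number of days old) are assumptions for illustration:

```python
from datetime import date, timedelta


def inactive_branches(last_commit_dates: dict[str, date], inactive_days: int, today: date) -> list[str]:
    """Return the unmerged branches whose last commit is more than inactive_days old."""
    cutoff = today - timedelta(days=inactive_days)
    return [name for name, last_commit in last_commit_dates.items() if last_commit < cutoff]


# Hypothetical unmerged branches with the date of their last commit.
branches = {"feature/login": date(2024, 1, 2), "feature/search": date(2024, 1, 29)}
print(inactive_branches(branches, inactive_days=7, today=date(2024, 1, 31)))  # ['feature/login']
```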

- Default target

≦ 0 branches

- Scales

count

- Default tags

ci

Supported subjects

Supporting sources

Unused CI-jobs#

The number of continuous integration jobs that are unused.

Why measure unused CI-jobs? Removing unused, obsolete CI-jobs helps to keep a clear overview of the relevant CI-jobs.

- Default target

≦ 0 CI-jobs

- Scales

count

- Default tags

ci

Supported subjects

Supporting sources

User story points#

The total number of points of a selection of user stories.

Why measure user story points? Keep track of the number of user story points so the team has sufficient ‘ready’ stories to plan the next sprint.

- Default target

≧ 100 user story points

- Scales

count

- Default tags

process efficiency

Supporting sources

Velocity#

The average number of user story points delivered in recent sprints.

Why measure velocity? Keep track of the velocity so the team knows how many story points need to be ‘ready’ at least to plan the next sprint.

- Default target

≧ 20 user story points per sprint

- Scales

count

- Default tags

process efficiency

Supported subjects

Supporting sources

Violation remediation effort#

The amount of effort it takes to remediate violations.

Why measure violation remediation effort? To better plan the work to remediate violations, it is helpful to have an estimate of the amount of effort it takes to remediate them.

- Default target

≦ 60 minutes

- Scales

count

- Default tags

maintainability

Supported subjects

Supporting sources

Violations#

The number of violations of rules in the software.

Why measure violations? Depending on what kind of rules or guidelines the source checks, violations may pose a risk for different quality characteristics of the software. For example, the more programming rules are violated, the harder it may be to understand and maintain the software (1, 2). And the more accessibility guidelines are violated, the harder it may be for users to use the software (3).

See also

- Default target

≦ 0 violations

- Scales

count

Supported subjects

Supporting sources

Sources#

This is an overview of all the sources that Quality-time can use to measure metrics. For each source, the metrics that the source can measure are listed. Also, a link to the source’s own documentation is provided.

Anchore#

Anchore image scan analysis report in JSON format.

Supported metrics

Anchore Jenkins plugin#

A Jenkins job with an Anchore report produced by the Anchore Jenkins plugin.

Supported metrics

Axe CSV#

An Axe accessibility report in CSV format.

Supported metrics

See also

Axe HTML reporter#

Creates an HTML report from the axe-core library AxeResults object.

Supported metrics

Axe-core#

Axe is an accessibility testing engine for websites and other HTML-based user interfaces.

Supported metrics

Azure DevOps Server#

Azure DevOps Server (formerly known as Team Foundation Server) by Microsoft provides source code management, reporting, requirements management, project management, automated builds, testing and release management.

Supported metrics

Bandit#

Bandit is a tool designed to find common security issues in Python code.

Supported metrics

See also

Calendar date#

Specify a specific date in the past or the future. Can be used to, for example, warn when it is time for the next security test.

Supported metrics

Cargo Audit#

Cargo Audit is a linter for Rust Cargo.lock files for crates.

Supported metrics

Checkmarx CxSAST#

Static analysis software to identify security vulnerabilities in both custom code and open source components.

Supported metrics

Cobertura#

Cobertura is a free Java tool that calculates the percentage of code accessed by tests.

Supported metrics

Cobertura Jenkins plugin#

Jenkins plugin for Cobertura, a free Java tool that calculates the percentage of code accessed by tests.

Supported metrics

Composer#

A Dependency Manager for PHP.

Supported metrics

See also

Dependency-Track#

Dependency-Track is a component analysis platform that allows organizations to identify and reduce risk in the software supply chain.

Supported metrics

See also

Gatling#

Gatling is an open-source load testing solution, designed for continuous load testing and development pipeline integration.

Supported metrics

See also

GitLab#

GitLab provides Git-repositories, wikis, issue-tracking and continuous integration/continuous deployment pipelines.

Note

Some metric sources are documents in JSON, XML, CSV, or HTML format. Examples include JUnit XML reports, JaCoCo XML reports and Axe CSV reports. Usually, you add a JUnit (or JaCoCo, or Axe…) source and then simply configure the same URL that you use to access the document via the browser. Unfortunately, this does not work if the document is stored in GitLab. In that case, you still use the JUnit (or JaCoCo, or Axe…) source, but provide a GitLab API URL as URL. Depending on where the document is stored in GitLab, there are two scenarios; the source is a build artifact of a GitLab CI pipeline, or the source is stored in a GitLab repository:

- When the metric source is a build artifact of a GitLab CI pipeline, use URLs of the following format:

  https://<gitlab-server>/api/v4/projects/<project-id>/jobs/artifacts/<branch>/raw/<path>/<to>/<file-name>?job=<job-name>

  The project id can be found under the project’s general settings.

  If the repository is private, you also need to enter a personal access token with the scope read_api in the private token field.

- When the metric source is a file stored in a GitLab repository, use URLs of the following format:

  https://<gitlab-server>/api/v4/projects/<project-id>/repository/files/<file-path-with-slashes-%2F-encoded>/raw?ref=<branch>

  The project id can be found under the project’s general settings.

  If the repository is private, you also need to enter a personal access token with the scope read_repository in the private token field.
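The two URL formats can be assembled as in the following sketch. The helper names, server name, project id, paths, and job name are hypothetical; the sketch also assumes a branch name that needs no escaping in the artifact URL:

```python
from urllib.parse import quote, urlencode


def artifact_url(server: str, project_id: int, branch: str, path: str, job: str) -> str:
    """Build the URL of a file that is a build artifact of a GitLab CI job."""
    query = urlencode({"job": job})
    return f"https://{server}/api/v4/projects/{project_id}/jobs/artifacts/{branch}/raw/{path}?{query}"


def repository_file_url(server: str, project_id: int, file_path: str, branch: str) -> str:
    """Build the URL of a file stored in a GitLab repository."""
    encoded_path = quote(file_path, safe="")  # Encode slashes in the file path as %2F.
    query = urlencode({"ref": branch})
    return f"https://{server}/api/v4/projects/{project_id}/repository/files/{encoded_path}/raw?{query}"


print(artifact_url("gitlab.example.org", 42, "main", "build/reports/junit.xml", "unit-tests"))
# https://gitlab.example.org/api/v4/projects/42/jobs/artifacts/main/raw/build/reports/junit.xml?job=unit-tests
print(repository_file_url("gitlab.example.org", 42, "build/reports/junit.xml", "main"))
# https://gitlab.example.org/api/v4/projects/42/repository/files/build%2Freports%2Fjunit.xml/raw?ref=main
```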

Supported metrics

See also

Harbor#

Harbor is an open source registry that secures artifacts with policies and role-based access control, ensures images are scanned and free from vulnerabilities, and signs images as trusted.

Supported metrics

See also

Harbor JSON#

Harbor is an open source registry that secures artifacts with policies and role-based access control, ensures images are scanned and free from vulnerabilities, and signs images as trusted. Use Harbor JSON as source for accessing vulnerability reports downloaded from the Harbor API in JSON format.

Supported metrics

See also

JMeter CSV#

Apache JMeter application is open source software, a 100% pure Java application designed to load test functional behavior and measure performance.

Supported metrics

See also

JMeter JSON#

Apache JMeter application is open source software, a 100% pure Java application designed to load test functional behavior and measure performance.

Supported metrics

See also

JSON file with security warnings#

A generic vulnerability report with security warnings in JSON format.

In some cases, there are security vulnerabilities not found by automated tools. Quality-time has the ability to parse security warnings from JSON files with a generic format.

The JSON format consists of an object with one key vulnerabilities. The value should be a list of vulnerabilities. Each vulnerability is an object with three keys: title, description, and severity. The title and description values should be strings. The severity is also a string and can be either low, medium, or high.

Example generic JSON file:

    {
      "vulnerabilities": [
        {
          "title": "ISO27001:2013 A9 Insufficient Access Control",
          "description": "The Application does not enforce Two-Factor Authentication and therefore does not satisfy security best practices.",
          "severity": "high"
        },
        {
          "title": "Threat Model Finding: Uploading Malicious Files",
          "description": "An attacker can upload malicious files with low privileges that can perform direct API calls and perform unwanted mutations or see unauthorized information.",
          "severity": "medium"
        }
      ]
    }
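Before pointing Quality-time at a hand-written report, it may help to check that the file follows the format described above. The checker below is a hypothetical sketch based only on that description; it is not part of Quality-time:

```python
import json

SEVERITIES = {"low", "medium", "high"}


def validation_errors(report_json: str) -> list[str]:
    """Return a list of problems found in a generic security warnings report."""
    report = json.loads(report_json)
    vulnerabilities = report.get("vulnerabilities") if isinstance(report, dict) else None
    if not isinstance(vulnerabilities, list):
        return ["the report should be an object with a 'vulnerabilities' list"]
    errors = []
    for index, vulnerability in enumerate(vulnerabilities):
        if not isinstance(vulnerability, dict):
            errors.append(f"vulnerability {index} should be an object")
            continue
        for key in ("title", "description"):
            if not isinstance(vulnerability.get(key), str):
                errors.append(f"vulnerability {index}: '{key}' should be a string")
        severity = vulnerability.get("severity")
        if not isinstance(severity, str) or severity not in SEVERITIES:
            errors.append(f"vulnerability {index}: 'severity' should be low, medium, or high")
    return errors


# Hypothetical report with an unsupported severity.
example = '{"vulnerabilities": [{"title": "T", "description": "D", "severity": "critical"}]}'
print(validation_errors(example))
# ["vulnerability 0: 'severity' should be low, medium, or high"]
```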

Supported metrics

JUnit XML report#

Test reports in the JUnit XML format.

Supported metrics

See also

JaCoCo#

JaCoCo is an open-source tool for measuring and reporting Java code coverage.

Supported metrics

See also

JaCoCo Jenkins plugin#

A Jenkins job with a JaCoCo coverage report produced by the JaCoCo Jenkins plugin.

Supported metrics

See also

Jenkins#

Jenkins is an open source continuous integration/continuous deployment server.

Note

Some metric sources are documents in JSON, XML, CSV, or HTML format. Examples include JUnit XML reports, JaCoCo XML reports and Axe CSV reports. Usually, you add a JUnit (or JaCoCo, or Axe…) source and then simply configure the same URL that you use to access the document via the browser. If the document is stored in Jenkins and Quality-time needs to be authorized to access resources in Jenkins, there are two options:

Configure a Jenkins user and password. The username of the Jenkins user needs to be entered in the “Username” field and the password in the “Password” field.

Configure a Jenkins user and a private token of that user. The username of the Jenkins user needs to be entered in the “Username” field and the private token in the “Password” field.
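Both options above amount to HTTP basic authentication: the username is sent together with either the password or the private token. To check credentials outside Quality-time, a request can be built as in the sketch below. The function name build_request and the example URL are hypothetical, and the sketch assumes the Jenkins instance accepts basic authentication for the document URL.

```python
import base64
import urllib.request


def build_request(url: str, username: str, secret: str) -> urllib.request.Request:
    """Create a GET request with an HTTP basic authentication header.

    `secret` is either the Jenkins user's password or that user's private token.
    """
    credentials = base64.b64encode(f"{username}:{secret}".encode()).decode()
    return urllib.request.Request(url, headers={"Authorization": f"Basic {credentials}"})


# Hypothetical usage; replace the URL and credentials with real values:
# request = build_request("https://jenkins.example.org/job/demo/ws/report.xml", "jane", "token")
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```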

Supported metrics

See also

Jenkins test report#

A Jenkins job with test results.

Supported metrics

See also

Jira#

Jira is a proprietary issue tracker developed by Atlassian supporting bug tracking and agile project management.

Supported metrics

Manual number#

A number entered manually by a Quality-time user.

The manual number source supports all metric types that take a number as value. Because users have to keep the value up to date by hand, this source is only meant to be used as a temporary solution for when no automated source is available yet. For example, when the results of a security audit are only available in a PDF-report, a ‘security warnings’ metric can be added with the number of findings as manual number source.

Supported metrics

NCover#

A .NET code coverage solution.

Supported metrics

See also

OJAudit#

An Oracle JDeveloper program to audit Java code against JDeveloper’s audit rules.

Supported metrics

OWASP Dependency-Check#

OWASP Dependency-Check is a utility that identifies project dependencies and checks if there are any known, publicly disclosed, vulnerabilities.

Supported metrics

OWASP ZAP#

The OWASP Zed Attack Proxy (ZAP) can help automatically find security vulnerabilities in web applications while the application is being developed and tested.

Supported metrics

See also

OpenVAS#

OpenVAS (Open Vulnerability Assessment System) is a software framework of several services and tools offering vulnerability scanning and vulnerability management.

Supported metrics

See also

Performancetest-runner#

An open source tool to run performancetests and create performancetest reports.

Supported metrics

Pyupio Safety#

Safety checks Python dependencies for known security vulnerabilities.

Supported metrics

See also

Quality-time#

Quality report software for software development and maintenance.

Supported metrics

See also

Robot Framework#

Robot Framework is a generic open source automation framework for acceptance testing, acceptance test driven development, and robotic process automation.

Supported metrics

See also

Robot Framework Jenkins plugin#

A Jenkins plugin for Robot Framework, a generic open source automation framework for acceptance testing, acceptance test driven development, and robotic process automation.

Supported metrics

See also

SARIF#

A Static Analysis Results Interchange Format (SARIF) vulnerability report in JSON format.

Supported metrics

Snyk#

Snyk vulnerability report in JSON format.

Supported metrics

SonarQube#

SonarQube is an open-source platform for continuous inspection of code quality to perform automatic reviews with static analysis of code to detect bugs, code smells, and security vulnerabilities on 20+ programming languages.

Supported metrics

See also

TestNG#

Test reports in the TestNG XML format.

Supported metrics

See also

Trello#

Trello is a collaboration tool that organizes projects into boards.

Supported metrics

See also

Trivy JSON#

A Trivy vulnerability report in JSON format.

Supported metrics

cloc#

cloc is an open-source tool for counting blank lines, comment lines, and physical lines of source code in many programming languages.

Supported metrics

See also

npm#

npm is a package manager for the JavaScript programming language.

Supported metrics

See also

pip#

pip is the package installer for Python. You can use pip to install packages from the Python Package Index and other indexes.

Supported metrics

See also

Metric-source combinations#

This is an overview of all supported combinations of metrics and sources. For each combination of metric and source, the mandatory and optional parameters are listed that can be used to configure the source to measure the metric. If Quality-time needs to make certain assumptions about the source, for example which SonarQube rules to use to count long methods, then these assumptions are listed under ‘configurations’.

Average issue lead time from Azure DevOps Server#

Azure DevOps Server can be used to measure average issue lead time.

Mandatory parameters#

URL including organization and project. URL of the Azure DevOps instance, with port if necessary, and with organization and project. For example: ‘https://dev.azure.com/{organization}/{project}’.

Optional parameters#

Issue query in WIQL (Work Item Query Language). This should only contain the WHERE clause of a WIQL query, as the selected fields are static. For example, use the following clause to hide issues marked as done: “[System.State] <> ‘Done’”. See https://docs.microsoft.com/en-us/azure/devops/boards/queries/wiql-syntax?view=azure-devops.

Number of days to look back for work items. Work items are selected if they are completed and have been updated within the number of days configured. The default value is:

90.

Private token.

Average issue lead time from Jira#

Jira can be used to measure average issue lead time.

Mandatory parameters#

Issue query in JQL (Jira Query Language).

URL. URL of the Jira instance, with port if necessary. For example, ‘https://jira.example.org’.

Optional parameters#

Number of days to look back for selecting issues. Issues are selected if they are completed and have been updated within the number of days configured. The default value is:

90.

Password for basic authentication.

Private token.

Username for basic authentication.
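The effect of the look-back parameter can be illustrated with a small calculation. This is a sketch under stated assumptions, not Quality-time's implementation: it assumes lead time runs from an issue's creation to its completion, and it represents each issue as a hypothetical (created, completed, updated) tuple.

```python
from datetime import datetime, timedelta


def average_lead_time_days(issues, now, lookback_days=90):
    """Average lead time in days of completed issues updated within the look-back period.

    Assumption: lead time is measured from creation to completion.
    Returns None when no issue qualifies.
    """
    cutoff = now - timedelta(days=lookback_days)
    lead_times = [
        (completed - created).days
        for created, completed, updated in issues
        if completed is not None and updated >= cutoff
    ]
    return sum(lead_times) / len(lead_times) if lead_times else None
```

Issues that are not completed, or that were last updated before the cutoff, do not contribute to the average.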

Change failure rate from Azure DevOps Server#

Azure DevOps Server can be used to measure change failure rate.

Mandatory parameters#

URL including organization and project. URL of the Azure DevOps instance, with port if necessary, and with organization and project. For example: ‘https://dev.azure.com/{organization}/{project}’.

Optional parameters#

Issue query in WIQL (Work Item Query Language). This should only contain the WHERE clause of a WIQL query, as the selected fields are static. For example, use the following clause to hide issues marked as done: “[System.State] <> ‘Done’”. See https://docs.microsoft.com/en-us/azure/devops/boards/queries/wiql-syntax?view=azure-devops.

Number of days to look back for selecting pipeline runs. The default value is:

90.

Number of days to look back for work items. Work items are selected if they are completed and have been updated within the number of days configured. The default value is:

90.

Pipelines to ignore (regular expressions or pipeline names). Pipelines to ignore can be specified by pipeline name or by regular expression. Use {folder name}/{pipeline name} for the names of pipelines in folders.

Pipelines to include (regular expressions or pipeline names). Pipelines to include can be specified by pipeline name or by regular expression. Use {folder name}/{pipeline name} for the names of pipelines in folders.

Private token.
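The include and ignore parameters accept either literal pipeline names or regular expressions. The sketch below shows one way such filtering could behave. It is an illustration only: whether Quality-time matches the whole name or a part of it is not stated here, so the use of re.fullmatch is an assumption, as are the function names.

```python
import re


def matches(name: str, names_or_patterns: list) -> bool:
    """Return whether the name equals an entry or fully matches it as a regular expression."""
    for entry in names_or_patterns:
        if name == entry:
            return True
        try:
            if re.fullmatch(entry, name):
                return True
        except re.error:
            continue  # Not a valid regular expression; it was already compared as a plain name
    return False


def select_pipelines(names, include, ignore):
    """Keep names that match the include list (if it is not empty) and do not match the ignore list."""
    return [
        name
        for name in names
        if (not include or matches(name, include)) and not matches(name, ignore)
    ]
```

With this sketch, including deploy/.* and ignoring deploy/test keeps deploy/prod out of the names build, deploy/prod, deploy/test, and nightly.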

Change failure rate from Jenkins#

Jenkins can be used to measure change failure rate.

Mandatory parameters#

URL. URL of the Jenkins instance, with port if necessary, but without path. For example, ‘https://jenkins.example.org’.

Optional parameters#

Jobs to ignore (regular expressions or job names). Jobs to ignore can be specified by job name or by regular expression. Use {parent job name}/{child job name} for the names of nested jobs.

Jobs to include (regular expressions or job names). Jobs to include can be specified by job name or by regular expression. Use {parent job name}/{child job name} for the names of nested jobs.

Number of days to look back for selecting job builds. The default value is:

90.

Password or API token for basic authentication.

Username for basic authentication.

Change failure rate from Jira#

Jira can be used to measure change failure rate.

Mandatory parameters#

Issue query in JQL (Jira Query Language).

URL. URL of the Jira instance, with port if necessary. For example, ‘https://jira.example.org’.

Optional parameters#

Number of days to look back for selecting issues. Issues are selected if they are completed and have been updated within the number of days configured. The default value is:

90.

Password for basic authentication.

Private token.

Username for basic authentication.

Change failure rate from GitLab#

GitLab can be used to measure change failure rate.

Mandatory parameters#

GitLab instance URL. URL of the GitLab instance, with port if necessary, but without path. For example, ‘https://gitlab.com’.

Project (name with namespace or id).

Optional parameters#

Branches and tags to ignore (regular expressions, branch names or tag names).

Jobs to ignore (regular expressions or job names). Jobs to ignore can be specified by job name or by regular expression.

Number of days to look back for selecting pipeline jobs. The default value is:

90.

Private token (with read_api scope).

Commented out code from Manual number#

Manual number can be used to measure commented out code.

Mandatory parameters#

Number. The default value is:

0.

Commented out code from SonarQube#

SonarQube can be used to measure commented out code.

Mandatory parameters#

Project key. The project key can be found by opening the project in SonarQube and looking at the bottom of the grey column on the right.

URL. URL of the SonarQube instance, with port if necessary, but without path. For example, ‘https://sonarcloud.io’.

Optional parameters#

Branch (only supported by commercial SonarQube editions). The default value is:

master.

Private token.

Configurations:

Rules used to detect commented out code:

abap:S125

c:S125

cpp:S125

csharpsquid:S125

flex:CommentedCode

java:S125

javascript:S125

kotlin:S125

objc:S125

php:S125

plsql:S125

python:S125

scala:S125

swift:S125

tsql:S125

typescript:S125

Web:AvoidCommentedOutCodeCheck

xml:S125

Complex units from Manual number#

Manual number can be used to measure complex units.

Mandatory parameters#

Number. The default value is:

0.

Complex units from SonarQube#

SonarQube can be used to measure complex units.

Mandatory parameters#

Project key. The project key can be found by opening the project in SonarQube and looking at the bottom of the grey column on the right.

URL. URL of the SonarQube instance, with port if necessary, but without path. For example, ‘https://sonarcloud.io’.

Optional parameters#

Branch (only supported by commercial SonarQube editions). The default value is:

master.

Private token.

Configurations:

Rules used to detect complex units:

abap:S1541

abap:S3776

c:S1541

c:S3776

cpp:S1541

cpp:S3776

csharpsquid:S1541

csharpsquid:S3776

flex:FunctionComplexity

go:S3776

java:S1541

java:S3776

javascript:S1541

javascript:S3776

kotlin:S3776

objc:S1541

objc:S3776

php:S1541

php:S3776

plsql:PlSql.FunctionAndProcedureComplexity

python:FunctionComplexity

python:S3776

ruby:S3776

scala:S3776

swift:S1541

swift:S3776

typescript:S1541

typescript:S3776

vbnet:S1541

vbnet:S3776

Dependencies from Composer#

Composer can be used to measure dependencies.

Mandatory parameters#

URL to a Composer ‘outdated’ report in JSON format or to a zip with Composer ‘outdated’ reports in JSON format.

Optional parameters#

Latest version statuses. Limit which latest version statuses to show. The status ‘safe update possible’ means that based on semantic versioning the update should be backwards compatible. This parameter is multiple choice. Possible statuses are:

safe update possible, unknown, up-to-date, update possible. The default value is: all statuses.

Password for basic authentication.

Private token.

URL to a Composer ‘outdated’ report in a human readable format. If provided, users clicking the source URL will visit this URL instead of the Composer ‘outdated’ report in JSON format.

Username for basic authentication.

Dependencies from Dependency-Track#

Dependency-Track can be used to measure dependencies.

Mandatory parameters#

URL of the Dependency-Track API. URL of the Dependency-Track API, with port if necessary, but without path. For example, ‘https://api.dependencytrack.example.org’.

Optional parameters#

API key.

Latest version statuses. Limit which latest version statuses to show. This parameter is multiple choice. Possible statuses are:

unknown, up-to-date, update possible. The default value is: all statuses.

URL of the Dependency-Track instance. URL of the Dependency-Track instance, with port if necessary, but without path. For example, ‘https://www.dependencytrack.example.org’. If provided, users clicking the source URL will visit this URL instead of the Dependency-Track API.

Dependencies from Manual number#

Manual number can be used to measure dependencies.

Mandatory parameters#

Number. The default value is:

0.

Dependencies from npm#

npm can be used to measure dependencies.

Tip

To generate the list of outdated dependencies, run:

npm outdated --all --long --json > npm-outdated.json

To run npm outdated with Docker, use:

docker run --rm -v "$SRC":/work -w /work node:lts npm outdated --all --long --json > outdated.json
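To get a quick impression of what the generated report contains, the JSON can be inspected with a few lines of Python. This is a rough sketch, not Quality-time's collector: it assumes the report is an object keyed by package name whose entries carry current and latest fields, and it also accepts a list of entries per package because the exact shape may differ between npm versions. The function name count_outdated is hypothetical.

```python
import json


def count_outdated(report_text: str) -> int:
    """Count report entries whose current version differs from the latest version."""
    report = json.loads(report_text)
    count = 0
    for entries in report.values():
        # Assumption: an entry is either a single object or a list of objects
        for entry in entries if isinstance(entries, list) else [entries]:
            if entry.get("current") != entry.get("latest"):
                count += 1
    return count
```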

Mandatory parameters#

URL to a npm ‘outdated’ report in JSON format or to a zip with npm ‘outdated’ reports in JSON format.

Optional parameters#

Password for basic authentication.

Private token.

URL to a npm ‘outdated’ report in a human readable format. If provided, users clicking the source URL will visit this URL instead of the npm ‘outdated’ report in JSON format.

Username for basic authentication.

Dependencies from OWASP Dependency-Check#

OWASP Dependency-Check can be used to measure dependencies.

Mandatory parameters#

URL to an OWASP Dependency-Check report in XML format or to a zip with OWASP Dependency-Check reports in XML format.

Optional parameters#

Parts of file paths to ignore (regular expressions). Parts of file paths to ignore can be specified by regular expression. The parts of file paths that match one or more of the regular expressions are removed. If, after applying the regular expressions, multiple warnings are the same, only one is reported.

Password for basic authentication.

Private token.

URL to an OWASP Dependency-Check report in a human readable format. If provided, users clicking the source URL will visit this URL instead of the OWASP Dependency-Check report in XML format.

Username for basic authentication.
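The "parts of file paths to ignore" parameter is easiest to understand with an example: matching parts are removed from each path, and paths that become identical are reported once. The sketch below illustrates that idea on plain path strings. The function names and sample paths are hypothetical, and the real collector compares warnings rather than bare paths.

```python
import re


def strip_path_parts(path: str, patterns: list) -> str:
    """Remove every part of the path that matches one of the regular expressions."""
    for pattern in patterns:
        path = re.sub(pattern, "", path)
    return path


def deduplicate(paths: list, patterns: list) -> list:
    """Strip the ignored parts from each path and keep one copy of each result, in order."""
    unique = []
    for path in paths:
        stripped = strip_path_parts(path, patterns)
        if stripped not in unique:
            unique.append(stripped)
    return unique
```

This is useful when reports contain build-specific directories, such as a workspace folder with a build number, that would otherwise make identical warnings look different.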

Dependencies from pip#

pip can be used to measure dependencies.

Tip

To generate the list of outdated packages in JSON format, run:

python -m pip list --outdated --format=json > pip-outdated.json
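The resulting file is a JSON list with one object per outdated package. As a minimal sketch for inspecting it, assuming each object has the name, version, and latest_version keys that pip writes, the following hypothetical helper lists what would be updated:

```python
import json


def outdated_packages(report_text: str) -> list:
    """Return (name, installed version, latest version) for each outdated package."""
    return [
        (package["name"], package["version"], package["latest_version"])
        for package in json.loads(report_text)
    ]
```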

Mandatory parameters#

URL to a pip ‘outdated’ report in JSON format or to a zip with pip ‘outdated’ reports in JSON format.

Optional parameters#

Password for basic authentication.

Private token.

URL to a pip ‘outdated’ report in a human readable format. If provided, users clicking the source URL will visit this URL instead of the pip ‘outdated’ report in JSON format.

Username for basic authentication.

Duplicated lines from Manual number#

Manual number can be used to measure duplicated lines.

Mandatory parameters#

Number. The default value is:

0.

Duplicated lines from SonarQube#

SonarQube can be used to measure duplicated lines.

Mandatory parameters#

Project key. The project key can be found by opening the project in SonarQube and looking at the bottom of the grey column on the right.

URL. URL of the SonarQube instance, with port if necessary, but without path. For example, ‘https://sonarcloud.io’.

Optional parameters#

Branch (only supported by commercial SonarQube editions). The default value is:

master.

Private token.

Failed CI-jobs from Azure DevOps Server#

Azure DevOps Server can be used to measure failed CI-jobs.

Mandatory parameters#

URL including organization and project. URL of the Azure DevOps instance, with port if necessary, and with organization and project. For example: ‘https://dev.azure.com/{organization}/{project}’.

Optional parameters#

Failure types. Limit which failure types to count as failed. This parameter is multiple choice. Possible failure types are:

canceled, failed, no result, partially succeeded. The default value is: all failure types.

Pipelines to ignore (regular expressions or pipeline names). Pipelines to ignore can be specified by pipeline name or by regular expression. Use {folder name}/{pipeline name} for the names of pipelines in folders.

Pipelines to include (regular expressions or pipeline names). Pipelines to include can be specified by pipeline name or by regular expression. Use {folder name}/{pipeline name} for the names of pipelines in folders.

Private token.

Failed CI-jobs from Jenkins#

Jenkins can be used to measure failed CI-jobs.

To authorize Quality-time for (non-public resources in) Jenkins, you can either use a username and password or a username and API token. Note that, unlike other sources, when using the API token Jenkins also requires the username to which the token belongs.

Mandatory parameters#

URL. URL of the Jenkins instance, with port if necessary, but without path. For example, ‘https://jenkins.example.org’.

Optional parameters#

Failure types. Limit which failure types to count as failed. This parameter is multiple choice. Possible failure types are:

Aborted, Failure, Not built, Unstable. The default value is: all failure types.

Jobs to ignore (regular expressions or job names). Jobs to ignore can be specified by job name or by regular expression. Use {parent job name}/{child job name} for the names of nested jobs.

Jobs to include (regular expressions or job names). Jobs to include can be specified by job name or by regular expression. Use {parent job name}/{child job name} for the names of nested jobs.

Password or API token for basic authentication.

Username for basic authentication.

Failed CI-jobs from GitLab#

GitLab can be used to measure failed CI-jobs.

Mandatory parameters#

GitLab instance URL. URL of the GitLab instance, with port if necessary, but without path. For example, ‘https://gitlab.com’.

Project (name with namespace or id).

Optional parameters#

Branches and tags to ignore (regular expressions, branch names or tag names).

Failure types. Limit which failure types to count as failed. This parameter is multiple choice. Possible failure types are:

canceled, failed, skipped. The default value is: all failure types.

Jobs to ignore (regular expressions or job names). Jobs to ignore can be specified by job name or by regular expression.

Number of days to look back for selecting pipeline jobs. The default value is:

90.

Private token (with read_api scope).

Failed CI-jobs from Manual number#

Manual number can be used to measure failed CI-jobs.

Mandatory parameters#

Number. The default value is:

0.

Issues from Azure DevOps Server#

Azure DevOps Server can be used to measure issues.

Mandatory parameters#

URL including organization and project. URL of the Azure DevOps instance, with port if necessary, and with organization and project. For example: ‘https://dev.azure.com/{organization}/{project}’.

Optional parameters#

Issue query in WIQL (Work Item Query Language). This should only contain the WHERE clause of a WIQL query, as the selected fields are static. For example, use the following clause to hide issues marked as done: “[System.State] <> ‘Done’”. See https://docs.microsoft.com/en-us/azure/devops/boards/queries/wiql-syntax?view=azure-devops.

Private token.

Issues from Jira#

Jira can be used to measure issues.

Mandatory parameters#

Issue query in JQL (Jira Query Language).

URL. URL of the Jira instance, with port if necessary. For example, ‘https://jira.example.org’.

Optional parameters#

Password for basic authentication.

Private token.

Username for basic authentication.

Issues from Manual number#

Manual number can be used to measure issues.

Mandatory parameters#

Number. The default value is:

0.

Issues from Trello#

Trello can be used to measure issues.

Mandatory parameters#

Board (title or id).

URL. The default value is:

https://trello.com.

Optional parameters#

API key.

Cards to count. This parameter is multiple choice. Possible cards are:

inactive, overdue. The default value is: all cards.

Lists to ignore (title or id).

Number of days without activity after which to consider cards inactive. The default value is:

30.

Token.

Job runs within time period from Azure DevOps Server#

Azure DevOps Server can be used to measure job runs within time period.

Mandatory parameters#

URL including organization and project. URL of the Azure DevOps instance, with port if necessary, and with organization and project. For example: ‘https://dev.azure.com/{organization}/{project}’.

Optional parameters#

Number of days to look back for selecting pipeline runs. The default value is:

90.

Pipelines to ignore (regular expressions or pipeline names). Pipelines to ignore can be specified by pipeline name or by regular expression. Use {folder name}/{pipeline name} for the names of pipelines in folders.

Pipelines to include (regular expressions or pipeline names). Pipelines to include can be specified by pipeline name or by regular expression. Use {folder name}/{pipeline name} for the names of pipelines in folders.

Private token.

Job runs within time period from GitLab#

GitLab can be used to measure job runs within time period.

Mandatory parameters#

GitLab instance URL. URL of the GitLab instance, with port if necessary, but without path. For example, ‘https://gitlab.com’.

Project (name with namespace or id).

Optional parameters#

Branches and tags to ignore (regular expressions, branch names or tag names).

Jobs to ignore (regular expressions or job names). Jobs to ignore can be specified by job name or by regular expression.

Number of days to look back for selecting pipeline jobs. The default value is:

90.

Private token (with read_api scope).

Job runs within time period from Jenkins#

Jenkins can be used to measure job runs within time period.

Mandatory parameters#

URL. URL of the Jenkins instance, with port if necessary, but without path. For example, ‘https://jenkins.example.org’.

Optional parameters#

Jobs to ignore (regular expressions or job names). Jobs to ignore can be specified by job name or by regular expression. Use {parent job name}/{child job name} for the names of nested jobs.

Jobs to include (regular expressions or job names). Jobs to include can be specified by job name or by regular expression. Use {parent job name}/{child job name} for the names of nested jobs.

Number of days to look back for selecting job builds. The default value is:

90.

Password or API token for basic authentication.

Username for basic authentication.

Job runs within time period from Manual number#

Manual number can be used to measure job runs within time period.

Mandatory parameters#

Number. The default value is:

0.

Size (LOC) from cloc#

cloc can be used to measure size (LOC).

Mandatory parameters#

URL to a cloc report in JSON format or to a zip with cloc reports in JSON format.

Optional parameters#

Files to include (regular expressions or file names). Note that filtering files only works when the cloc report is generated with the --by-file option.

Languages to ignore (regular expressions or language names).

Password for basic authentication.

Private token.

URL to a cloc report in a human readable format. If provided, users clicking the source URL will visit this URL instead of the cloc report in JSON format.

Username for basic authentication.
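The effect of the "languages to ignore" parameter can be sketched on a cloc JSON report generated without the --by-file option. The sketch assumes the report is an object with a header entry, a SUM entry, and one entry per language containing a code count. The function name lines_of_code and the use of re.fullmatch for matching language names are assumptions for illustration, not Quality-time's actual logic.

```python
import json
import re


def lines_of_code(report_text: str, languages_to_ignore: list) -> int:
    """Sum the 'code' counts per language, skipping ignored languages."""
    report = json.loads(report_text)
    total = 0
    for key, value in report.items():
        if key in ("header", "SUM"):
            continue  # Metadata and totals, not a language entry
        if any(re.fullmatch(pattern, key) for pattern in languages_to_ignore):
            continue
        total += value["code"]
    return total
```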

Size (LOC) from Manual number#

Manual number can be used to measure size (LOC).

Mandatory parameters#

Number. The default value is:

0.

Size (LOC) from SonarQube#

SonarQube can be used to measure size (LOC).

Mandatory parameters#

Project key. The project key can be found by opening the project in SonarQube and looking at the bottom of the grey column on the right.

URL. URL of the SonarQube instance, with port if necessary, but without path. For example, ‘https://sonarcloud.io’.

Optional parameters#

Branch (only supported by commercial SonarQube editions). The default value is:

master.

Languages to ignore (regular expressions or language names).

Lines to count. Either count all lines including lines with comments or only count lines with code, excluding comments. Note: it’s possible to ignore specific languages only when counting lines with code. This is a SonarQube limitation. This parameter is single choice. Possible lines to count are:

all lines, lines with code. The default value is: lines with code.

Private token.

Long units from Manual number#

Manual number can be used to measure long units.

Mandatory parameters#

Number. The default value is:

0.

Long units from SonarQube#

SonarQube can be used to measure long units.

Mandatory parameters#

Project key. The project key can be found by opening the project in SonarQube and looking at the bottom of the grey column on the right.

URL. URL of the SonarQube instance, with port if necessary, but without path. For example, ‘https://sonarcloud.io’.

Optional parameters#

Branch (only supported by commercial SonarQube editions). The default value is:

master.

Private token.

Configurations:

Rules used to detect long units:

abap:S104

c:S104

c:S1151

c:S138

cpp:S104

cpp:S1151

cpp:S1188

cpp:S138

cpp:S6184

csharpsquid:S104

csharpsquid:S1151

csharpsquid:S138

flex:S1151

flex:S138

go:S104

go:S1151

go:S138

java:S104

java:S1151

java:S1188

java:S138

java:S2972

java:S5612

javascript:S104

javascript:S138

kotlin:S104

kotlin:S1151

kotlin:S138

kotlin:S5612

objc:S104

objc:S1151

objc:S138

php:S104

php:S1151

php:S138

php:S2042

plsql:S104

plsql:S1151

python:S104

python:S138

ruby:S104

ruby:S1151

ruby:S138

scala:S104

scala:S1151

scala:S138

swift:S104

swift:S1151

swift:S1188

swift:S138

swift:S2042

tsql:S104

tsql:S1151

tsql:S138

typescript:S104

typescript:S138

vbnet:S104

vbnet:S1151

vbnet:S138

Web:FileLengthCheck

Web:LongJavaScriptCheck

Manual test duration from Jira#

Jira can be used to measure manual test duration.

Mandatory parameters#

Issue query in JQL (Jira Query Language).

Manual test duration field (name or id).

URL. URL of the Jira instance, with port if necessary. For example, ‘https://jira.example.org’.

Optional parameters#

Password for basic authentication.

Private token.

Username for basic authentication.

Manual test duration from Manual number#

Manual number can be used to measure manual test duration.

Mandatory parameters#

Number. The default value is:

0.

Manual test execution from Jira#

Jira can be used to measure manual test execution.

Mandatory parameters#

Default expected manual test execution frequency (days). Specify how often the manual tests should be executed. For example, if the sprint length is three weeks, manual tests should be executed at least once every 21 days. The default value is:

21.

Issue query in JQL (Jira Query Language).

URL. URL of the Jira instance, with port if necessary. For example, ‘https://jira.example.org’.

Optional parameters#

Manual test execution frequency field (name or id).

Password for basic authentication.

Private token.

Username for basic authentication.
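The relation between the default frequency and the optional per-issue frequency field can be sketched as follows. This is an illustration under assumptions, not the collector's code: it assumes a manual test counts as overdue once more days have passed since its last execution than its frequency allows, and that a frequency taken from the issue field overrides the default. The function name is_overdue is hypothetical.

```python
from datetime import date, timedelta
from typing import Optional

DEFAULT_FREQUENCY_DAYS = 21  # The documented default for the expected frequency


def is_overdue(last_executed: date, today: date, frequency_days: Optional[int] = None) -> bool:
    """Return whether a manual test has not been executed within its expected frequency.

    `frequency_days` stands for the value of the optional frequency field;
    when it is absent, the default frequency is used.
    """
    frequency = frequency_days if frequency_days is not None else DEFAULT_FREQUENCY_DAYS
    return today - last_executed > timedelta(days=frequency)
```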

Manual test execution from Manual number#

Manual number can be used to measure manual test execution.

Mandatory parameters#

Number. The default value is:

0.

Many parameters from Manual number#

Manual number can be used to measure many parameters.

Mandatory parameters#

Number. The default value is:

0.

Many parameters from SonarQube#

SonarQube can be used to measure many parameters.

Mandatory parameters#

Project key. The project key can be found by opening the project in SonarQube and looking at the bottom of the grey column on the right.

URL. URL of the SonarQube instance, with port if necessary, but without path. For example, ‘https://sonarcloud.io’.

Optional parameters#

Branch (only supported by commercial SonarQube editions). The default value is:

master.

Private token.

Configurations:

Rules used to detect units with many parameters:

c:S107

cpp:S107

csharpsquid:S107

csharpsquid:S2436

flex:S107

go:S107

java:S107

javascript:S107

kotlin:S107

objc:S107

php:S107

python:S107

ruby:S107

scala:S107

swift:S107

tsql:S107

typescript:S107

vbnet:S107

Merge requests from Azure DevOps Server#

Azure DevOps Server can be used to measure merge requests.

Mandatory parameters#

URL including organization and project. URL of the Azure DevOps instance, with port if necessary, and with organization and project. For example: ‘https://dev.azure.com/{organization}/{project}’.

Optional parameters#

Merge request states. Limit which merge request states to count. This parameter is multiple choice. Possible states are:

abandoned, active, completed, not set. The default value is: all states.

Minimum number of upvotes. Only count merge requests with fewer than the minimum number of upvotes. The default value is:

0.

Private token.

Repository (name or id).

Target branches to include (regular expressions or branch names).

Merge requests from GitLab#

GitLab can be used to measure merge requests.

Mandatory parameters#

GitLab instance URL. URL of the GitLab instance, with port if necessary, but without path. For example, ‘https://gitlab.com’.

Project (name with namespace or id).

Optional parameters#

Approval states to include (requires GitLab Premium). This parameter is multiple choice. Possible approval states are:

approved, not approved, unknown. The default value is: all approval states.

Merge request states. Limit which merge request states to count. This parameter is multiple choice. Possible states are:

closed, locked, merged, opened. The default value is: all states.

Minimum number of upvotes. Only count merge requests with fewer than the minimum number of upvotes. The default value is:

0.

Private token (with read_api scope).

Target branches to include (regular expressions or branch names).

Merge requests from Manual number#

Manual number can be used to measure merge requests.

Mandatory parameters#

Number. The default value is:

0.

Metrics from Manual number#

Manual number can be used to measure metrics.

Mandatory parameters#

Number. The default value is:

0.

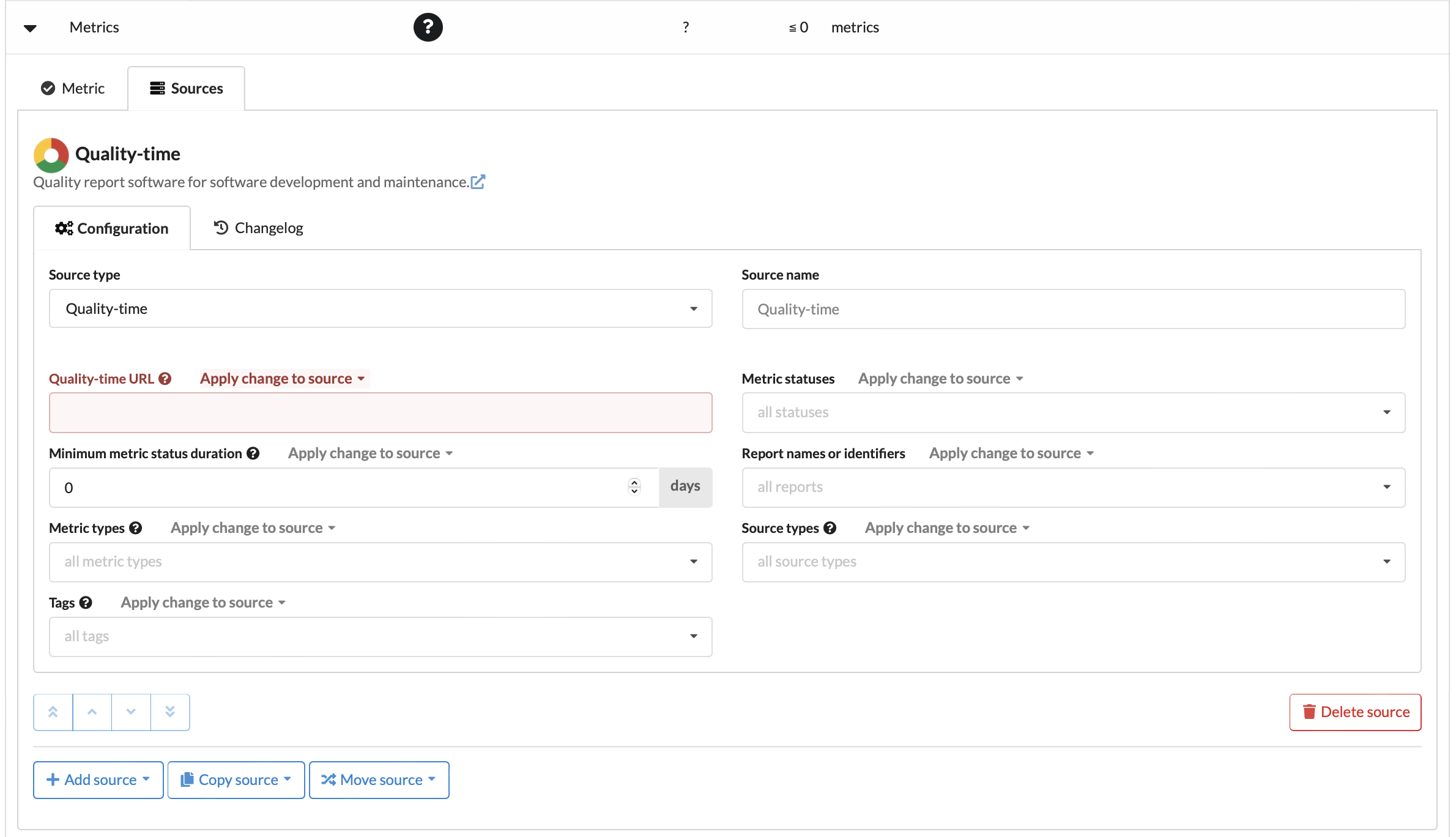

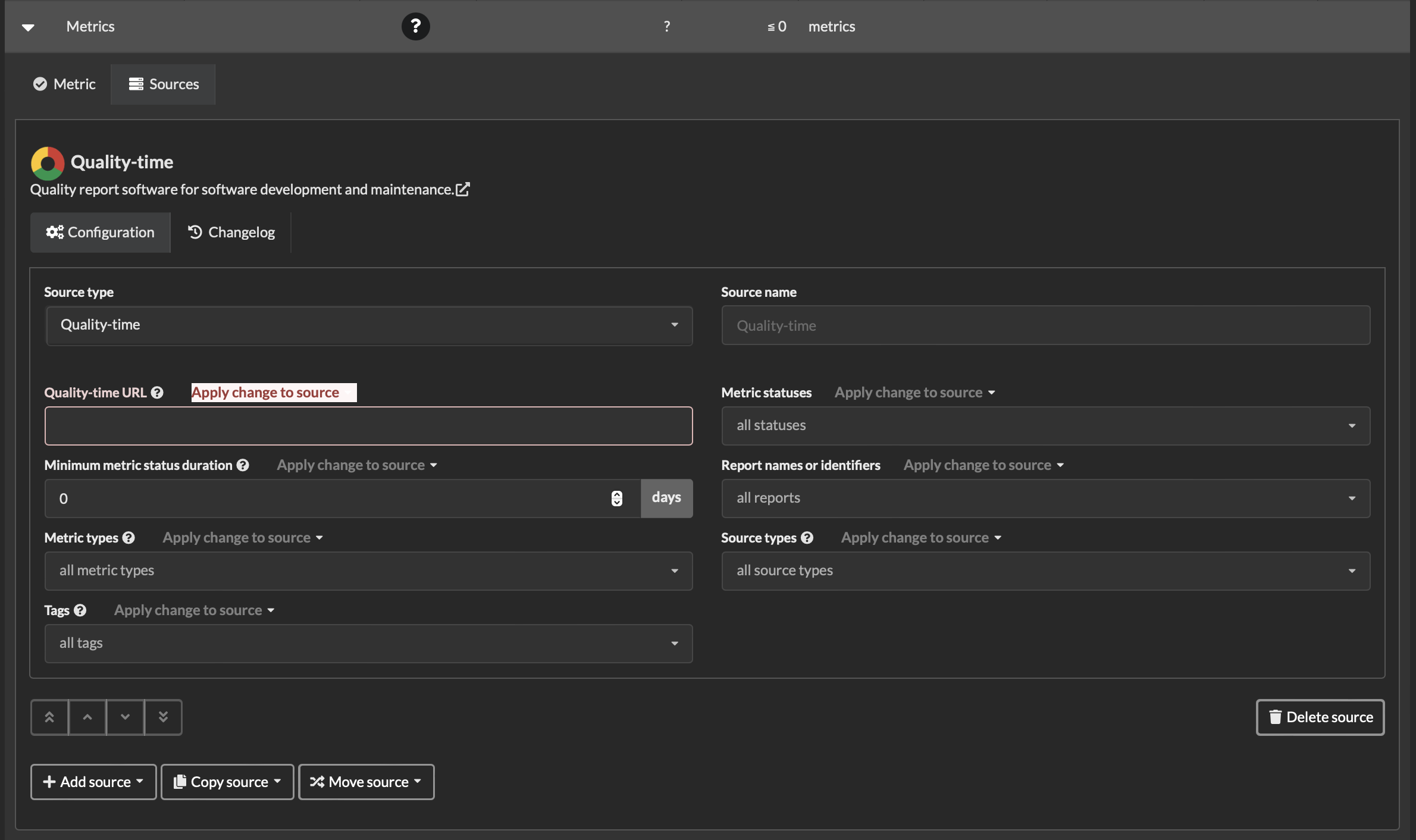

Metrics from Quality-time#

Quality-time can be used to measure metrics.

Mandatory parameters#

Quality-time URL. URL of the Quality-time instance, with port if necessary, but without path. For example, ‘https://quality-time.example.org’.

Optional parameters#

Metric statuses. This parameter is multiple choice. Possible metric statuses are:

informative (blue), near target met (yellow), target met (green), target not met (red), technical debt target met (grey), unknown (white). The default value is: all metric statuses.

Metric types. If provided, only count metrics with the selected metric types. This parameter is multiple choice. Possible metric types are:

Average issue lead time, CI-pipeline duration, Change failure rate, Commented out code, Complex units, Dependencies, Duplicated lines, Failed CI-jobs, Issues, Job runs within time period, Long units, Manual test duration, Manual test execution, Many parameters, Merge requests, Metrics, Missing metrics, Performancetest duration, Performancetest stability, Scalability, Security warnings, Sentiment, Size (LOC), Slow transactions, Software version, Source up-to-dateness, Source version, Suppressed violations, Test branch coverage, Test cases, Test line coverage, Tests, Time remaining, Todo and fixme comments, Unmerged branches, Unused CI-jobs, User story points, Velocity, Violation remediation effort, Violations. The default value is: all metric types.

Minimum metric status duration. Only count metrics whose status has not changed for the given number of days. The default value is:

0.

Report names or identifiers.

Source types. If provided, only count metrics with one or more sources of the selected source types. This parameter is multiple choice. Possible source types are:

Anchore Jenkins plugin, Anchore, Axe CSV, Axe HTML reporter, Axe-core, Azure DevOps Server, Bandit, Calendar date, Cargo Audit, Checkmarx CxSAST, Cobertura Jenkins plugin, Cobertura, Composer, Dependency-Track, Gatling, GitLab, Harbor JSON, Harbor, JMeter CSV, JMeter JSON, JSON file with security warnings, JUnit XML report, JaCoCo Jenkins plugin, JaCoCo, Jenkins test report, Jenkins, Jira, Manual number, NCover, OJAudit, OWASP Dependency-Check, OWASP ZAP, OpenVAS, Performancetest-runner, Pyupio Safety, Quality-time, Robot Framework Jenkins plugin, Robot Framework, SARIF, Snyk, SonarQube, TestNG, Trello, Trivy JSON, cloc, npm, pip. The default value is: all source types.

Tags. If provided, only count metrics with one or more of the given tags.
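The optional parameters above act as filters that narrow down which metrics are counted. The following is a minimal sketch of how such filters could combine, not the actual Quality-time collector. The `Metric` class, its field names, and the `count_metrics` function are hypothetical and exist only for illustration; the sketch assumes that a filter that is left empty does not restrict the count.

```python
from dataclasses import dataclass, field


@dataclass
class Metric:
    """Hypothetical, simplified stand-in for a metric in a Quality-time report."""

    status: str
    days_in_status: int
    tags: set = field(default_factory=set)


def count_metrics(metrics, statuses=None, min_status_duration=0, tags=None):
    """Count the metrics that pass every filter that was provided."""
    count = 0
    for metric in metrics:
        if statuses and metric.status not in statuses:
            continue  # A status filter is given and this status is not selected
        if metric.days_in_status < min_status_duration:
            continue  # The status changed more recently than the minimum duration
        if tags and not (metric.tags & set(tags)):
            continue  # A tag filter is given and the metric has none of the tags
        count += 1
    return count


metrics = [
    Metric("target not met", 12, {"security"}),
    Metric("target not met", 2, {"ci"}),
    Metric("target met", 30, {"security", "ci"}),
]
```

With this sample data, `count_metrics(metrics, statuses={"target not met"}, min_status_duration=7)` counts only the first metric, because the second one changed status two days ago.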

Missing metrics from Manual number#

Manual number can be used to measure missing metrics.

Mandatory parameters#

Number. The default value is:

0.

Missing metrics from Quality-time#

Quality-time can be used to measure missing metrics.

Mandatory parameters#

Quality-time URL. URL of the Quality-time instance, with port if necessary, but without path. For example, ‘https://quality-time.example.org’.

Optional parameters#

Report names or identifiers.

Subjects to ignore (subject names or identifiers). The Quality-time missing metrics collector ignores metrics that are missing from the subjects in this list.
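The Quality-time URL parameter expects the address of the instance, with a port if necessary, but without a path. As a rough illustration, a value could be checked against that shape as sketched below. The function name `is_instance_url` is hypothetical, and accepting a single trailing slash is an assumption of this sketch rather than documented behavior.

```python
from urllib.parse import urlsplit


def is_instance_url(url: str) -> bool:
    """Return whether the URL has a scheme and host, optionally a port, and no path."""
    parts = urlsplit(url)
    has_host = parts.scheme in ("http", "https") and bool(parts.hostname)
    # Assumption: a bare trailing slash is tolerated, anything longer counts as a path
    has_no_path = parts.path in ("", "/") and not parts.query and not parts.fragment
    return has_host and has_no_path
```

For example, `https://quality-time.example.org` and `https://quality-time.example.org:8080` pass the check, while `https://quality-time.example.org/api/v3` does not.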

Performancetest duration from Gatling#

Gatling can be used to measure performancetest duration.

Mandatory parameters#

URL to a Gatling report in HTML format or to a zip with Gatling reports in HTML format.

Optional parameters#

Password for basic authentication.

Private token.

Username for basic authentication.

Performancetest duration from JMeter CSV#

JMeter CSV can be used to measure performancetest duration.

Mandatory parameters#

URL to a JMeter report in CSV format or to a zip with JMeter reports in CSV format.

Optional parameters#

Password for basic authentication.

Private token.

URL to a JMeter report in a human readable format. If provided, users clicking the source URL will visit this URL instead of the JMeter report in CSV format.

Username for basic authentication.
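The mandatory URL may point either to a single CSV report or to a zip archive with several CSV reports. The sketch below shows one way to handle both cases with the Python standard library. It is an illustration only: the function name `read_csv_reports` is hypothetical, and the sketch assumes UTF-8 encoded reports with a header row.

```python
import csv
import io
import zipfile


def read_csv_reports(payload: bytes) -> list:
    """Parse a single CSV report, or every CSV report inside a zip archive."""
    buffer = io.BytesIO(payload)
    if zipfile.is_zipfile(buffer):
        with zipfile.ZipFile(buffer) as archive:
            # Only consider archive members that look like CSV files
            names = [name for name in archive.namelist() if name.lower().endswith(".csv")]
            texts = [archive.read(name).decode("utf-8") for name in names]
    else:
        texts = [payload.decode("utf-8")]
    # Each report becomes a list of rows, keyed by the column names in the header
    return [list(csv.DictReader(io.StringIO(text))) for text in texts]
```

The function returns one list of rows per report, so a plain CSV file yields a list with one element and a zip archive yields one element per CSV file it contains.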

Performancetest duration from Manual number#

Manual number can be used to measure performancetest duration.

Mandatory parameters#

Number. The default value is:

0.

Performancetest duration from Performancetest-runner#

Performancetest-runner can be used to measure performancetest duration.

Mandatory parameters#

URL to a Performancetest-runner report in HTML format or to a zip with Performancetest-runner reports in HTML format.

Optional parameters#

Password for basic authentication.

Private token.

Username for basic authentication.

Performancetest stability from Manual number#

Manual number can be used to measure performancetest stability.

Mandatory parameters#

Number. The default value is:

0.

Performancetest stability from Performancetest-runner#

Performancetest-runner can be used to measure performancetest stability.

Mandatory parameters#

URL to a Performancetest-runner report in HTML format or to a zip with Performancetest-runner reports in HTML format.

Optional parameters#

Password for basic authentication.

Private token.

Username for basic authentication.

CI-pipeline duration from Manual number#

Manual number can be used to measure CI-pipeline duration.

Mandatory parameters#

Number. The default value is:

0.

CI-pipeline duration from GitLab#

GitLab can be used to measure CI-pipeline duration.

Mandatory parameters#

GitLab instance URL. URL of the GitLab instance, with port if necessary, but without path. For example, ‘https://gitlab.com’.

Project (name with namespace or id).

Optional parameters#

Branches (regular expressions or branch names).

Number of days to look back for selecting pipelines. The default value is:

7.

Pipeline statuses to include. This parameter is multiple choice. Possible pipeline statuses are:

canceled, created, failed, manual, pending, preparing, running, scheduled, skipped, success, waiting for resource. The default value is: all pipeline statuses.

Pipeline triggers to include. This parameter is multiple choice. Possible pipeline triggers are:

api, chat, external pull request event, external, merge request event, ondemand DAST scan, ondemand DAST validation, parent pipeline, pipeline, push, schedule, trigger, web-IDE, web. The default value is: all pipeline triggers.

Private token (with read_api scope).
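To show how the GitLab parameters relate to each other, the sketch below builds a request URL for the GitLab REST API endpoint that lists project pipelines and then filters the returned pipelines by status and trigger. Whether the Quality-time collector uses this endpoint or filters in this way is an assumption. The function names `pipelines_url` and `select_pipelines` are hypothetical. A project name with namespace has to be URL-encoded when it is used in the path.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional
from urllib.parse import quote, urlencode


def pipelines_url(instance_url: str, project: str, lookback_days: int = 7,
                  now: Optional[datetime] = None) -> str:
    """Build a URL that lists the pipelines updated within the look-back period."""
    now = now or datetime.now(timezone.utc)
    updated_after = (now - timedelta(days=lookback_days)).isoformat()
    project_id = quote(project, safe="")  # "group/app" becomes "group%2Fapp"
    query = urlencode({"updated_after": updated_after, "per_page": 100})
    return f"{instance_url}/api/v4/projects/{project_id}/pipelines?{query}"


def select_pipelines(pipelines, statuses=None, triggers=None):
    """Keep the pipelines that match the selected statuses and triggers, if any."""
    return [
        pipeline
        for pipeline in pipelines
        if (not statuses or pipeline["status"] in statuses)
        and (not triggers or pipeline["source"] in triggers)
    ]
```

The private token would be sent along with the request; the `read_api` scope suffices because the request only reads data.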

Violation remediation effort from Manual number#

Manual number can be used to measure violation remediation effort.

Mandatory parameters#

Number. The default value is:

0.

Violation remediation effort from SonarQube#

SonarQube can be used to measure violation remediation effort.

Mandatory parameters#

Project key. The project key can be found by opening the project in SonarQube and looking at the bottom of the grey column on the right.

URL. URL of the SonarQube instance, with port if necessary, but without path. For example, ‘https://sonarcloud.io’.

Optional parameters#

Branch (only supported by commercial SonarQube editions). The default value is:

master.

Private token.

Types of effort. This parameter is multiple choice. Possible effort types are:

effort to fix all bug issues, effort to fix all code smells, effort to fix all vulnerabilities. The default value is: all effort types.
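The three effort types correspond to metrics that SonarQube itself exposes through its web API. As a hedged illustration, the sketch below maps each effort type to the SonarQube metric key with the matching description and builds a URL for the `api/measures/component` endpoint. That the Quality-time collector performs exactly this request is an assumption, and the names `EFFORT_METRIC_KEYS` and `measures_url` are hypothetical.

```python
from urllib.parse import urlencode

# SonarQube metric keys whose descriptions match the effort types listed above
EFFORT_METRIC_KEYS = {
    "effort to fix all bug issues": "reliability_remediation_effort",
    "effort to fix all code smells": "sqale_index",
    "effort to fix all vulnerabilities": "security_remediation_effort",
}


def measures_url(url, project_key, effort_types=None, branch="master"):
    """Build a URL that requests the selected effort metrics for a project."""
    selected = effort_types or list(EFFORT_METRIC_KEYS)  # Default: all effort types
    metric_keys = ",".join(EFFORT_METRIC_KEYS[effort_type] for effort_type in selected)
    query = urlencode({"component": project_key, "metricKeys": metric_keys, "branch": branch})
    return f"{url}/api/measures/component?{query}"
```

Calling `measures_url("https://sonarcloud.io", "my-project")` requests all three metrics, mirroring the default value of the effort types parameter. The project key `my-project` is a placeholder.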

Scalability from Manual number#

Manual number can be used to measure scalability.

Mandatory parameters#

Number. The default value is:

0.

Scalability from Performancetest-runner#

Performancetest-runner can be used to measure scalability.

Mandatory parameters#

URL to a Performancetest-runner report in HTML format or to a zip with Performancetest-runner reports in HTML format.

Optional parameters#

Password for basic authentication.

Private token.

Username for basic authentication.

Security warnings from Anchore#

Anchore can be used to measure security warnings.

Mandatory parameters#

URL to an Anchore vulnerability report in JSON format or to a zip with Anchore vulnerability reports in JSON format.

Optional parameters#

Password for basic authentication.

Private token.

Severities. If provided, only count security warnings with the selected severities. This parameter is multiple choice. Possible severities are:

Critical, High, Low, Medium, Negligible, Unknown. The default value is: all severities.

URL to an Anchore vulnerability report in a human readable format. If provided, users clicking the source URL will visit this URL instead of the Anchore vulnerability report in JSON format.

Username for basic authentication.

Security warnings from Anchore Jenkins plugin#

Anchore Jenkins plugin can be used to measure security warnings.

To authorize Quality-time for (non-public resources in) Jenkins, you can either use a username and password or a username and API token. Note that, unlike other sources, when using the API token Jenkins also requires the username to which the token belongs.

Mandatory parameters#

URL to Jenkins job. URL to a Jenkins job with an Anchore report generated by the Anchore plugin. For example, ‘https://jenkins.example.org/job/anchore’ or ‘https://jenkins.example.org/job/anchore/job/main’ in case of a pipeline job.

Optional parameters#

Password or API token for basic authentication.

Severities. If provided, only count security warnings with the selected severities. This parameter is multiple choice. Possible severities are:

Critical, High, Low, Medium, Negligible, Unknown. The default value is: all severities.

Username for basic authentication.
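Because Jenkins needs the username even when an API token is used, both authorization options end up as HTTP basic authentication. The sketch below shows how such a header is formed in general; it is not taken from the Quality-time source code, and the function name `basic_auth_header` is hypothetical.

```python
import base64


def basic_auth_header(username: str, password_or_token: str) -> dict:
    """Build an HTTP basic authentication header from a username and a secret."""
    # Basic authentication encodes "username:secret" as base64
    credentials = f"{username}:{password_or_token}".encode("utf-8")
    return {"Authorization": "Basic " + base64.b64encode(credentials).decode("ascii")}
```

The same header shape applies whether the second argument is the user's password or an API token that belongs to that user.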

Security warnings from Bandit#

Bandit can be used to measure security warnings.

Mandatory parameters#

URL to a Bandit report in JSON format or to a zip with Bandit reports in JSON format.

Optional parameters#

Confidence levels. If provided, only count security warnings with the selected confidence levels. This parameter is multiple choice. Possible confidence levels are:

high, low, medium. The default value is: all confidence levels.

Password for basic authentication.

Private token.

Severities. If provided, only count security warnings with the selected severities. This parameter is multiple choice. Possible severities are:

high, low, medium. The default value is: all severities.

URL to a Bandit report in a human readable format. If provided, users clicking the source URL will visit this URL instead of the Bandit report in JSON format.

Username for basic authentication.
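The severity and confidence level parameters both narrow down which warnings in the Bandit report are counted. A minimal sketch of that filtering is given below. It relies on the `results`, `issue_severity`, and `issue_confidence` fields of Bandit's JSON output; the function name `count_warnings` is hypothetical, and treating an empty selection as "all" is an assumption that mirrors the documented defaults.

```python
import json


def count_warnings(report_json: str, severities=None, confidence_levels=None) -> int:
    """Count the warnings in a Bandit JSON report that match the selected filters."""
    results = json.loads(report_json).get("results", [])
    return sum(
        1
        for result in results
        # Bandit writes the levels in upper case, the parameters use lower case
        if (not severities or result["issue_severity"].lower() in severities)
        and (not confidence_levels or result["issue_confidence"].lower() in confidence_levels)
    )
```

A warning is counted only if it passes both filters, so selecting severity `high` and confidence level `high` counts just the warnings that are high on both scales.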

Security warnings from Cargo Audit#

Cargo Audit can be used to measure security warnings.

Mandatory parameters#

URL to a Cargo Audit report in JSON format or to a zip with Cargo Audit reports in JSON format.

Optional parameters#

Password for basic authentication.

Private token.